Measuring Experimentation impact with holdouts

Read time: 6 minutes

Last edited: Dec 14, 2024

Overview

This guide explains how to create a holdout experiment to measure the overall effectiveness of your Experimentation program.

As you begin planning your Experimentation program, you may want to track how much of an impact your experiments have over time. Will there be any measurable differences in behavior between the end users you include in experiments and those you do not? Which group of end users will spend more money, sign up for services, or affect other metrics at higher rates? Holdout groups can help you answer these questions.

Holdouts let you exclude a percentage of your audience from your Experimentation program. This lets you see the overall effect of your experiments on your customer base and helps you determine how effective the experiments you're running are. If, after a set period of time, such as a month or quarter, there are no measurable differences between the two groups, you may want to reconsider the number, scope, and design of the experiments you're running.

In this guide, you will:

- Decide on and create a metric

- Create the holdout

- Decide which experiments to add to the holdout

- Read your results

To learn more about LaunchDarkly's Experimentation offering, read Experimentation.

Prerequisites

To complete this tutorial, you must have the following prerequisites:

- An active LaunchDarkly account with Experimentation enabled, and with permissions to create flags and edit experiments

- Familiarity with LaunchDarkly's Experimentation feature

- A basic understanding of your business's needs or key performance indicators (KPIs)

Concepts

To complete this guide, you should understand the following concepts:

Decide on and create a metric

First, decide what metric you want to measure. Choose a metric that aligns with the same KPIs or goals you want to experiment on, such as average revenue per customer, or percentage of customers that sign up for your service.

In this example, you're in charge of your organization's growth program. You might be using a variety of metrics to measure things like total sign-ups, revenue per customer, or revenue per cart. Here, your main metric measuring the success of your organization's Experimentation program will be new, completed account sign-ups.

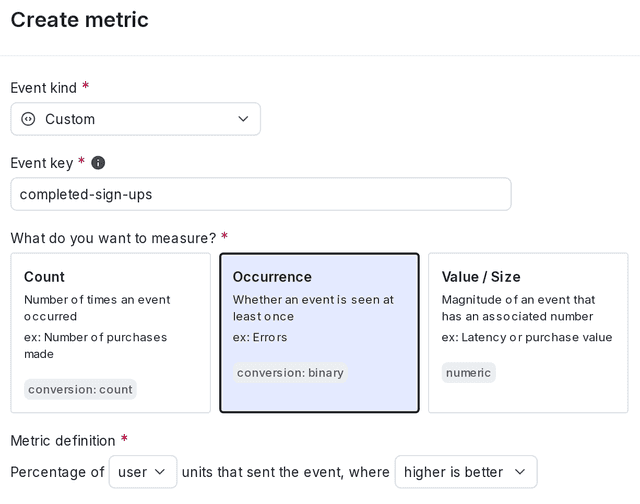

To create your metric:

- Navigate to the Metrics list.

- Click Create metric. The "Create metric" dialog appears.

- Select an event kind of Custom.

- Enter "completed-sign-ups" for the Event key.

- Choose Occurrence as what you want to measure.

- In the "Metric definition" section, select the following:

  - Percentage of User units that sent the event

  - where higher is better

- Enter "Completed sign-ups" as the metric Name.

- (Optional) Add a Description.

- (Optional) Add any Tags.

- (Optional) Update the Maintainer.

- Click Create metric.
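The "Percentage of User units that sent the event" definition counts each user at most once, no matter how many times that user sends the event. The sketch below shows that arithmetic; it assumes a hypothetical list of (user key, event key) pairs and a hypothetical `percent_of_units_converted` helper, and is not LaunchDarkly's actual event pipeline.

```python
from collections.abc import Iterable


def percent_of_units_converted(
    exposed_users: set[str],
    events: Iterable[tuple[str, str]],
    event_key: str = "completed-sign-ups",
) -> float:
    """Return the percentage of exposed users that sent `event_key` at least once.

    Hypothetical helper for illustration only. Repeated events from the same
    user are counted once, like an occurrence-style metric.
    """
    if not exposed_users:
        return 0.0
    converted = {
        user for user, key in events
        if key == event_key and user in exposed_users
    }
    return 100.0 * len(converted) / len(exposed_users)


events = [
    ("a", "completed-sign-ups"),
    ("a", "completed-sign-ups"),  # a second event from "a" does not count twice
    ("c", "completed-sign-ups"),
    ("b", "page-view"),           # a different event key is ignored
]
print(percent_of_units_converted({"a", "b", "c", "d"}, events))
```

Here two of the four users ("a" and "c") completed a sign-up, so the metric is 50%.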

Sending custom events to LaunchDarkly requires a unique event key. You can set the event key to anything you want. Adding this event key to your codebase lets your SDK track actions customers take in your app as events. To learn more, read Sending custom events.
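In application code, you call the SDK's track method with the same event key once a customer finishes signing up. The sketch below assumes the LaunchDarkly Python server-side SDK (v8 or later), where `LDClient.track(event_name, context)` sends a custom event. To keep it runnable without an SDK key, the helper accepts any object with a compatible `track` method; the `record_completed_sign_up` function and the `FakeClient` stand-in are hypothetical.

```python
from typing import Any, Protocol

# Must match the Event key entered when creating the metric.
SIGN_UP_EVENT_KEY = "completed-sign-ups"


class EventTracker(Protocol):
    """Anything with a track(event_name, context) method.

    With the real Python SDK this would be the client returned by
    ldclient.get(), and `context` would be an ldclient.Context.
    """

    def track(self, event_name: str, context: Any) -> None: ...


def record_completed_sign_up(client: EventTracker, context: Any) -> None:
    """Hypothetical helper: call this once the sign-up flow has succeeded."""
    client.track(SIGN_UP_EVENT_KEY, context)


class FakeClient:
    """Test double that records calls instead of sending events."""

    def __init__(self) -> None:
        self.sent: list[tuple[str, Any]] = []

    def track(self, event_name: str, context: Any) -> None:
        self.sent.append((event_name, context))


if __name__ == "__main__":
    # With the real SDK (not executed here), roughly:
    #   import ldclient
    #   from ldclient import Context
    #   from ldclient.config import Config
    #   ldclient.set_config(Config("YOUR_SDK_KEY"))
    #   client = ldclient.get()
    #   context = Context.builder("user-key-123").kind("user").build()
    fake = FakeClient()
    record_completed_sign_up(fake, {"kind": "user", "key": "user-key-123"})
    print(fake.sent)
```

Keeping the event key in a single constant makes it harder for the code and the metric definition to drift apart.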

LaunchDarkly also automatically generates a metric key when you create a metric. You only use the metric key to identify the metric in API calls. To learn more, read Creating and managing metrics.
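For example, a request that reads the metric through the LaunchDarkly REST API identifies it by project key and metric key in the URL path. The sketch below uses only the standard library and assumes the `GET /api/v2/metrics/{projectKey}/{metricKey}` endpoint with an access token passed in the `Authorization` header. The project key `default`, the metric key `completed-sign-ups`, the token `api-xxxx`, and the `build_get_metric_request` helper are all placeholders; check the metric's details page for its actual generated key.

```python
import urllib.parse
import urllib.request

API_BASE = "https://app.launchdarkly.com/api/v2"


def build_get_metric_request(
    project_key: str, metric_key: str, access_token: str
) -> urllib.request.Request:
    """Build (but do not send) a request that fetches one metric by its key."""
    path = "/metrics/{}/{}".format(
        urllib.parse.quote(project_key, safe=""),
        urllib.parse.quote(metric_key, safe=""),
    )
    return urllib.request.Request(
        API_BASE + path,
        headers={"Authorization": access_token},
        method="GET",
    )


req = build_get_metric_request("default", "completed-sign-ups", "api-xxxx")
print(req.full_url)
# Sending it would be: urllib.request.urlopen(req), which returns JSON.
```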

You can create and add secondary metrics to your holdout if needed.

Create a holdout

Before you create a holdout, you must decide the following:

- How long to run the holdout for: in this example, you are running the holdout for a full quarter. You must create the holdout before you create any of the experiments you want to include within it, so be sure to create the holdout in advance of the time period you want to run it for.

- What percentage of your customer base to include in the holdout: in this example, you are holding out 1% of your audience.
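Conceptually, a holdout assigns each context to the held-out group deterministically, so the same user stays in or out of the holdout for the whole quarter. The sketch below illustrates that idea with a generic hash-based split. It is not LaunchDarkly's actual bucketing algorithm, and the `holdout_salt` value and the `bucket` and `in_holdout` functions are hypothetical.

```python
import hashlib


def bucket(context_key: str, salt: str) -> float:
    """Map a context key to a stable value in [0, 1) using SHA-256."""
    digest = hashlib.sha256(f"{salt}.{context_key}".encode("utf-8")).digest()
    # Interpret the first 8 bytes as an unsigned integer and scale into [0, 1).
    return int.from_bytes(digest[:8], "big") / 2**64


def in_holdout(context_key: str, holdout_percent: float, salt: str = "holdout_salt") -> bool:
    """Return True if this context falls in the held-out slice of the audience."""
    return bucket(context_key, salt) < holdout_percent / 100.0


if __name__ == "__main__":
    keys = [f"user-{i}" for i in range(100_000)]
    held_out = sum(in_holdout(k, 1.0) for k in keys)
    # Expect roughly 1% of 100,000 users, so a count near 1,000.
    print(held_out)
```

Because the assignment depends only on the key and the salt, a returning user lands in the same group every time. A 1% holdout is also small in absolute terms: out of 100,000 users, only about 1,000 are held out, so the holdout period needs enough traffic for the comparison to be meaningful.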

To create the holdout:

- Navigate to the Holdouts list from the left sidenav.

- Click Create holdout. The "Holdout details" section appears.

- Enter a Name such as "Growth-related experiments."

- Enter a Description such as "Includes experiments related to our marketing campaigns and account sign-up process."

- Enter a Holdout amount. For this example you are holding out 1% of your audience.

- Click Next. The "Choose randomization unit and attributes" section appears.

- Select "user" as the Randomization unit.

- Click Next. The "Select metrics" section appears.

- Select your "Completed sign-ups" metric.

- Click Finish.

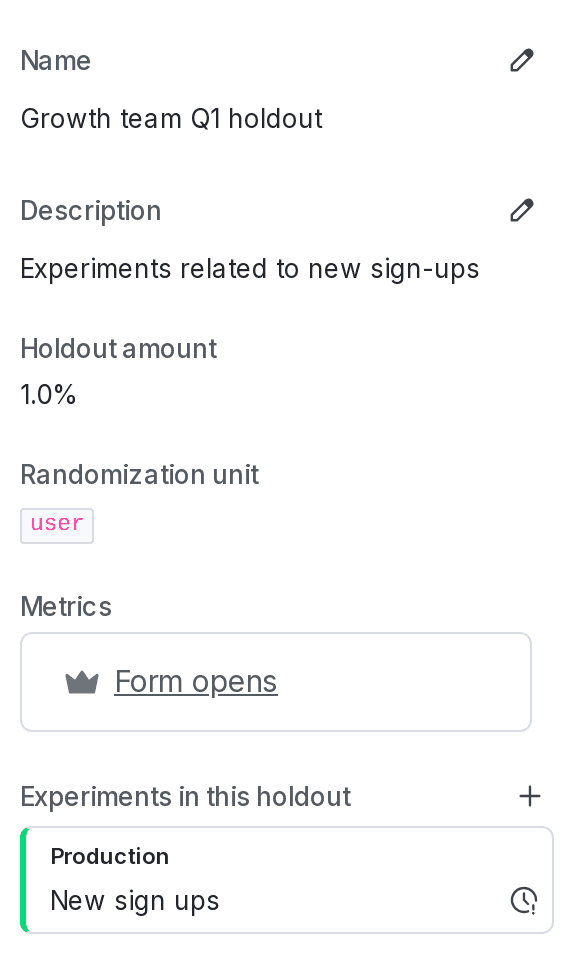

You are directed to the holdout details page.

Here is what your holdout will look like:

Decide which experiments to add to the holdout

You can add experiments to your holdout when you create them. When deciding which experiments to add to a holdout, think about whether you want to measure the effectiveness of your Experimentation program as a whole, or only for a subset of your business.

In this example, you are adding only experiments related to front-end changes in your app, such as pop-ups, different versions of copy, or different sign-up flows. You are not including experiments related to back-end changes that don't have a direct effect on how customers interact with your app.
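The effect of adding an experiment to a holdout can be summarized as a simple rule: a context in the holdout is not enrolled in that experiment, while experiments outside the holdout are unaffected. The sketch below expresses that rule; the `Experiment` record, its `holdout` field, and the `is_held_out` membership check are hypothetical, and this is not how LaunchDarkly evaluates flags internally.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Experiment:
    key: str
    holdout: Optional[str] = None  # name of the holdout this experiment belongs to, if any


def eligible_for_experiment(
    context_key: str,
    experiment: Experiment,
    is_held_out: Callable[[str, str], bool],
) -> bool:
    """Return True if the context may be enrolled in the experiment.

    `is_held_out(holdout_name, context_key)` is a hypothetical membership check.
    Experiments that are not in any holdout ignore it.
    """
    if experiment.holdout is None:
        return True
    return not is_held_out(experiment.holdout, context_key)


if __name__ == "__main__":
    held_out_users = {"Growth-related experiments": {"user-7"}}

    def check(holdout: str, key: str) -> bool:
        return key in held_out_users.get(holdout, set())

    signup_flow = Experiment("new-sign-up-flow", holdout="Growth-related experiments")
    backend_cache = Experiment("cache-tuning")  # back-end change, not in the holdout
    print(eligible_for_experiment("user-7", signup_flow, check))    # False
    print(eligible_for_experiment("user-7", backend_cache, check))  # True
```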

To learn how to add experiments to your holdout when you create them, read Creating funnel optimization experiments and Creating feature change experiments.

Read the results

At the end of the quarter, you can analyze your holdout experiments to understand how much of an impact your Experimentation program is having on your chosen metrics.

On the holdout details page, the Probability report tab displays the results of the metric for two variations:

- "Not in holdout" includes any contexts included in an experiment within the holdout.

- "In holdout" includes the contexts that were excluded from experiments within the holdout.
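The report compares the metric's value between these two groups. The arithmetic behind a relative difference is sketched below with made-up counts; the numbers are illustrative rather than real results, the helper names are hypothetical, and LaunchDarkly's report computes its own statistics.

```python
def conversion_rate(converted: int, exposed: int) -> float:
    """Fraction of units in a group that sent the metric event."""
    if exposed <= 0:
        raise ValueError("group must contain at least one unit")
    return converted / exposed


def relative_lift(treated_rate: float, baseline_rate: float) -> float:
    """Relative difference of the "Not in holdout" rate over the "In holdout" rate."""
    if baseline_rate == 0:
        raise ValueError("baseline rate must be non-zero")
    return (treated_rate - baseline_rate) / baseline_rate


# Illustrative counts only: a 1% holdout out of 100,000 users.
not_in_holdout = conversion_rate(converted=5_400, exposed=99_000)
in_holdout = conversion_rate(converted=48, exposed=1_000)
print(f"{relative_lift(not_in_holdout, in_holdout):+.1%}")
```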

You can see which variation is performing better as soon as at least one of the experiments in the holdout is running. However, we don't recommend making any decisions about your holdout experiment until all of the experiments within the holdout are finished and you have shipped winning variations for all of them. To learn how, read Winning variations.

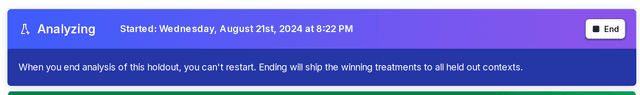

When you're confident you have recorded enough data, you can stop the holdout and analyze its results. To do this, navigate to the holdout details page and click End. The contexts that were in the holdout will no longer be excluded from future experiments.

You can then make a decision about the results of your holdout based on which variation is performing better:

- If the "Not in holdout" variation is performing better, your experiments are, overall, having a positive impact on the metric.

- If the "In holdout" variation is performing better, your experiments are, overall, having a negative impact on the metric. You may want to examine the experiments you're running and the variations you're testing to figure out how to build better or more effective experiments.
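The Probability report expresses results as probabilities rather than as a single difference. As a rough, generic illustration of that idea (not LaunchDarkly's actual statistical model), the sketch below estimates the probability that "Not in holdout" has the higher conversion rate by sampling from Beta posteriors with a uniform Beta(1, 1) prior. The function name and the counts are hypothetical.

```python
import random


def prob_not_in_holdout_better(
    conv_not_in: int, n_not_in: int,
    conv_in: int, n_in: int,
    draws: int = 20_000, seed: int = 0,
) -> float:
    """Monte Carlo estimate of P(rate_not_in > rate_in) under Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Posterior for each group: Beta(1 + conversions, 1 + non-conversions).
        p_not_in = rng.betavariate(1 + conv_not_in, 1 + n_not_in - conv_not_in)
        p_in = rng.betavariate(1 + conv_in, 1 + n_in - conv_in)
        wins += p_not_in > p_in
    return wins / draws


# Illustrative counts only.
print(prob_not_in_holdout_better(5_400, 99_000, 48, 1_000))
```

With only 1% of users held out, the "In holdout" posterior stays wide, which is one reason to let the holdout run for its full period before drawing conclusions.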

Conclusion

In this guide, you learned how to create a holdout experiment to measure the overall impact of your Experimentation program. By assessing the impact of your experiments as a whole, you can fine-tune your audiences and the metrics you're measuring, and ensure you're getting the most value out of LaunchDarkly Experimentation.
