Managing AI model configuration outside of code with the Node.js AI SDK

Read time: 12 minutes

Last edited: Jan 16, 2025

Overview

This guide shows how to manage AI model configuration and prompts for an OpenAI-powered application at runtime. It uses the LaunchDarkly Node.js (server-side) AI SDK and AI configs to customize your application dynamically.

Using AI configs to customize your application means you can:

- manage your model configuration and prompts outside of your application code

- enable non-engineers to iterate on prompt and model configurations

- apply updates to prompts and configurations without redeploying your application

This guide walks you through the work required both in your application code and in the LaunchDarkly UI.

If you're already familiar with setting up AI configs in the LaunchDarkly UI and want to skip straight to the sample code, you can find it on GitHub.

If you're not at all familiar with AI configs and would like additional explanation, you can start with the Quickstart for AI configs and come back to this guide when you're ready for a more realistic example.

Prerequisites

To complete this guide, you must have the following prerequisites:

- a LaunchDarkly account, including:

  - a LaunchDarkly SDK key for your environment.

  - an Admin role, Owner role, or custom role that allows AI config actions.

- a Node.js (server-side) application. This guide provides sample code in TypeScript. You can omit the types if you are using JavaScript.

- an OpenAI API key. The LaunchDarkly AI SDKs provide specific functions for tracking completion metrics for several common AI model families, as well as an option to record this information yourself. This guide uses OpenAI.

Example scenario

In this example, you manage a product recommendation system for an e-commerce platform. Using the LaunchDarkly Node.js (server-side) AI SDK, you'll configure AI prompts to provide personalized product suggestions based on your customers' preferences. You'll also track metrics, such as the number of output tokens used by your generative AI application.

Step 1: Prepare your development environment

First, install the required SDKs:

Then, set up credentials in your environment:

If you are starting from scratch, you can use the dotenv package with a .env file to load your API keys.
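Whichever way you load them, it helps to fail fast when a key is missing. A minimal sketch follows, assuming the keys are exposed as the environment variables LAUNCHDARKLY_SDK_KEY and OPENAI_API_KEY. OPENAI_API_KEY is the variable the OpenAI Node.js library reads by default; the LaunchDarkly variable name and the readCredentials helper are hypothetical.

```typescript
// Hypothetical helper. LAUNCHDARKLY_SDK_KEY is an assumed name, not one
// required by the LaunchDarkly SDK; OPENAI_API_KEY is the OpenAI library default.
export interface Credentials {
  launchDarklySdkKey: string;
  openAiApiKey: string;
}

export type Env = Record<string, string | undefined>;

// Reads both keys from an environment map and throws one descriptive error
// listing every missing or blank key, so the app fails at startup rather
// than on the first request.
export function readCredentials(env: Env): Credentials {
  const missing: string[] = [];
  const get = (name: string): string => {
    const value = env[name]?.trim();
    if (!value) {
      missing.push(name);
      return "";
    }
    return value;
  };

  const credentials: Credentials = {
    launchDarklySdkKey: get("LAUNCHDARKLY_SDK_KEY"),
    openAiApiKey: get("OPENAI_API_KEY"),
  };

  if (missing.length > 0) {
    throw new Error(`Missing required environment variables: ${missing.join(", ")}`);
  }
  return credentials;
}
```

After loading your .env file with dotenv, you might call readCredentials(process.env) once at startup and pass the returned keys to your client initialization code.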

Step 2: Initialize LaunchDarkly SDK clients

In your project, create a shared utility that initializes the LaunchDarkly SDK client and its AI client.

Create a config/launchDarkly.ts file:
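As a minimal sketch of such a shared utility, the accessor below initializes a client once and hands every caller the same instance. The real setup would create the LaunchDarkly client with your SDK key, wait for it to initialize, and wrap it with the AI client (at the time of writing, the Node.js packages expose init in @launchdarkly/node-server-sdk and initAi in @launchdarkly/server-sdk-ai; check the SDK reference for your version). Here that setup is passed in as a factory so the sketch runs without the SDK; createSharedClient is a hypothetical name.

```typescript
// Hypothetical initialize-once accessor. `factory` stands in for the real
// setup (create the LaunchDarkly client, await initialization, wrap it with
// the AI client); it is injected so this sketch has no SDK dependency.
export function createSharedClient<T>(factory: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;

  return () => {
    if (!pending) {
      // Cache the promise rather than the resolved value, so concurrent
      // callers share one initialization instead of each creating a client.
      pending = factory().catch((err: unknown) => {
        // Clear the cache on failure so a later call can retry.
        pending = undefined;
        throw err;
      });
    }
    return pending;
  };
}
```

Caching the promise is the design choice that matters here: two request handlers that start at the same moment still trigger only one client initialization.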

Step 3: Set up AI configs in LaunchDarkly

Next, create an AI config in the LaunchDarkly UI. AI configs are the LaunchDarkly resources that manage model configurations and messages for your generative AI applications.

To create an AI config:

- In LaunchDarkly, click Create and choose AI config.

- In the "Create AI config" dialog, give your AI config a human-readable Name, for example, "chat-helper-v1" or "shopping assistant."

- Click Create.

Then, configure the model and prompt by creating a variation. Every AI config has one or more variations, each of which includes your AI messages and model configuration.

Here's how:

- In the create panel in the Variations tab, replace "Untitled variation" with a variation Name. You'll use this to refer to the variation when you set up targeting rules, below.

- Click Select a model and select a supported OpenAI model, for example, gpt-4o.

- Optionally, click Parameters to view and update the model parameters. The Base value of each parameter comes from the model settings; you can choose different values for this variation if you prefer.

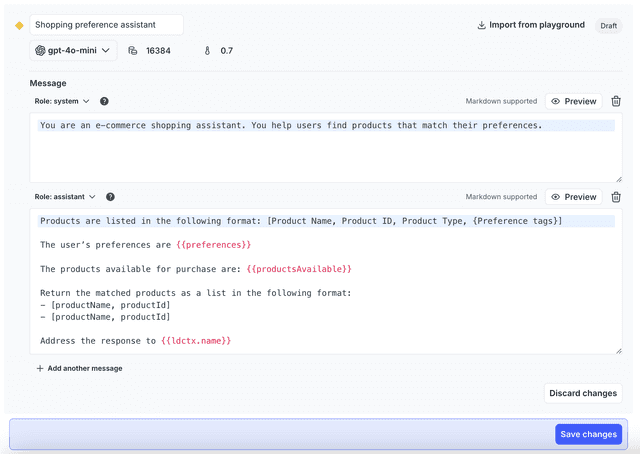

Next, add system, user, or assistant messages to define your prompt. In this example, you'll augment the prompt with saved user preferences.

Start with the system message:

Now, add an assistant message that pre-loads the model with the user's preferences and provides some instructions about the input format, response format, and context:

The double curly braces in the prompts allow you to augment the messages with customized data at runtime. To learn more, read Syntax for customization.
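To make the substitution concrete, here is a simplified, hypothetical stand-in for what happens to your messages at runtime. The AI SDK performs this rendering for you using a Mustache-style template engine; interpolate below handles only plain {{ name }} placeholders, and the variable names in the test are assumptions.

```typescript
// Message shape used by chat-style models: a role plus text content.
export interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Simplified stand-in for the SDK's template rendering: replaces
// {{ name }} placeholders with values from `variables`. Unknown names render
// as empty strings in this sketch. It does not implement the rest of the
// Mustache syntax (sections, dotted names, and so on).
export function interpolate(template: string, variables: Record<string, string>): string {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (_match: string, name: string) =>
    Object.prototype.hasOwnProperty.call(variables, name) ? (variables[name] ?? "") : "",
  );
}

// Renders every message in a variation, leaving roles untouched.
export function renderMessages(
  messages: ChatMessage[],
  variables: Record<string, string>,
): ChatMessage[] {
  return messages.map((m) => ({ ...m, content: interpolate(m.content, variables) }));
}
```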

Within the UI, the variation will look like the following:

Click Save changes at the bottom of the page to save the configuration.

Step 4: Enable targeting in LaunchDarkly

Targeting lets you specify which variation of your AI config each end user of your application receives.
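Targeting rules are evaluated against the context your application passes when it retrieves the AI config. A minimal sketch of building that context follows. UserContext mirrors the general shape of a LaunchDarkly user context (a kind and a key, plus optional attributes); in your application, use the context type exported by the SDK instead. Shopper, toContext, and the tier attribute are hypothetical.

```typescript
// Local type mirroring a single-kind LaunchDarkly context: `kind` and `key`
// are required, other attributes are optional.
export interface UserContext {
  kind: "user";
  key: string;
  name?: string;
  [attribute: string]: unknown;
}

// Hypothetical shopper record from your own user store.
export interface Shopper {
  id: string;
  displayName: string;
  tier: "standard" | "premium";
}

// Builds the context that targeting rules are evaluated against.
// `tier` is a hypothetical custom attribute a rule could match on later,
// for example to serve a different variation to premium shoppers.
export function toContext(shopper: Shopper): UserContext {
  return {
    kind: "user",
    key: shopper.id, // stable identifier, so a shopper keeps getting the same variation
    name: shopper.displayName,
    tier: shopper.tier,
  };
}
```

With only the default rule turned on, every context receives the same variation; attributes such as tier only matter once you add rules that reference them.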

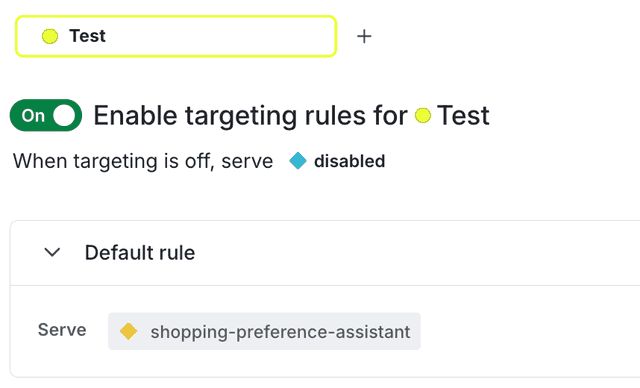

To set up targeting, click the Targeting tab. For now, you'll turn on targeting and set the default rule to serve the "Shopping preference assistant" variation you just created in your test environment.

To specify the AI config variation to use by default when the AI config is toggled on:

- Select the Targeting tab for your AI config.

- In the "Default rule" section, click Edit.

- Configure the default rule to serve the "Shopping preference assistant" variation.

- Click Review and save. In the confirmation dialog, click Save changes.

This AI config now serves the "Shopping preference assistant" variation by default:

Step 5: Integrate the AI config into your application

To integrate the LaunchDarkly AI config into your application, you'll need to set up your OpenAI client, set up your application data, add your completion code, and finally put it all together.

Set up the OpenAI client

Following the same pattern as config/launchDarkly.ts, create a config/openAi.ts file that initializes the OpenAI client:

Set up application data

Then, set up the data for your application. Normally, the product data that your application retrieves would come from a database. For this example, create a data/products.ts file to serve mock data:

Additionally, add the following data/mockProducts.ts file in the same folder:

The data contains the name, price, type, preference tags to match against a customer's preferences, a description, and a product ID for each product. This gives the AI model enough data to match products to a customer's query.
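The guide passes the product data to the model and lets the model do the matching. If your catalog grows, one option that is not part of the guide is to narrow the candidates before they reach the prompt, which also reduces input tokens. The sketch below assumes field names for the product shape described above (the guide's data/mockProducts.ts may use different ones); the mock entries and matchProducts are hypothetical.

```typescript
// Product shape matching the fields described above; names are assumptions.
export interface Product {
  id: string;
  name: string;
  price: number;
  type: string;
  preferenceTags: string[];
  description: string;
}

// Hypothetical mock data for illustration only.
export const mockProducts: Product[] = [
  { id: "p-100", name: "Organic Trail Mix", price: 12.99, type: "snack",
    preferenceTags: ["vegan", "organic"], description: "Nuts, seeds, and dried fruit." },
  { id: "p-200", name: "Merino Hiking Socks", price: 18.5, type: "apparel",
    preferenceTags: ["outdoors"], description: "Cushioned socks for long hikes." },
  { id: "p-300", name: "Bamboo Cutting Board", price: 24, type: "kitchen",
    preferenceTags: ["eco-friendly", "organic"], description: "A durable board made from bamboo." },
];

// Keeps products that share at least one tag with the customer's
// preferences (case-insensitive), ranked by number of matching tags.
// Array.prototype.sort is stable, so ties keep their catalog order.
export function matchProducts(products: Product[], preferences: string[]): Product[] {
  const wanted = new Set(preferences.map((p) => p.toLowerCase()));
  return products
    .map((product) => ({
      product,
      score: product.preferenceTags.filter((t) => wanted.has(t.toLowerCase())).length,
    }))
    .filter((entry) => entry.score > 0)
    .sort((a, b) => b.score - a.score)
    .map((entry) => entry.product);
}
```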

Add your completion

Next, add the AI config and completion code to your application. Create a completions/shoppingAssistant.ts file:

To record metrics, wrap the completion call in one of the tracking methods the LaunchDarkly AI SDK provides, in this case trackOpenAIMetrics. This lets you monitor your application's performance, as described below.
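The core of the completion code is turning the evaluated AI config into an OpenAI request. A minimal sketch of that mapping follows. EvaluatedAiConfig is a local stand-in for the fields this sketch reads (enabled, model name and parameters, messages), not the SDK's own type; the parameter keys temperature and maxTokens are assumptions, so match them to the parameters your variation defines. The request fields model, messages, temperature, and max_tokens are OpenAI chat completion parameters.

```typescript
export interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Local stand-in for the parts of an evaluated AI config read here.
export interface EvaluatedAiConfig {
  enabled?: boolean;
  model?: { name: string; parameters?: Record<string, unknown> };
  messages?: ChatMessage[];
}

// Subset of the OpenAI chat completions request body.
export interface ChatCompletionRequest {
  model: string;
  messages: ChatMessage[];
  temperature?: number;
  max_tokens?: number;
}

const asNumber = (value: unknown): number | undefined =>
  typeof value === "number" && Number.isFinite(value) ? value : undefined;

// Returns undefined when the config is disabled so the caller can skip the
// model call; otherwise falls back to `fallbackModel` if no model is set.
export function toChatRequest(
  config: EvaluatedAiConfig,
  fallbackModel = "gpt-4o",
): ChatCompletionRequest | undefined {
  if (config.enabled === false) return undefined;
  const params = config.model?.parameters ?? {};
  const request: ChatCompletionRequest = {
    model: config.model?.name ?? fallbackModel,
    messages: config.messages ?? [],
  };
  // Only set optional fields the variation defines with numeric values.
  const temperature = asNumber(params["temperature"]);
  const maxTokens = asNumber(params["maxTokens"]);
  if (temperature !== undefined) request.temperature = temperature;
  if (maxTokens !== undefined) request.max_tokens = maxTokens;
  return request;
}
```

In the real file, you would pass the returned request to the OpenAI client's chat.completions.create call inside the function you give to trackOpenAIMetrics, and return a fallback message when toChatRequest returns undefined.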

Plugging it in

Now that you've set up the AI config and completion, call the completion from somewhere in your application where you can see the response.

Here's an example:
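The values that fill the {{ }} placeholders are passed as variables when your code retrieves the AI config. A hypothetical helper for assembling them from a request is sketched below; the variable names preferences, query, and products are assumptions and must match the names your messages use.

```typescript
// Minimal product fields the prompt needs; hypothetical.
export interface ProductSummary {
  id: string;
  name: string;
  price: number;
  description: string;
}

// Hypothetical helper: converts request data into the string variables that
// the {{ }} placeholders in the AI config messages reference.
export function buildPromptVariables(
  preferences: string[],
  query: string,
  products: ProductSummary[],
): Record<string, string> {
  return {
    preferences: preferences.join(", "),
    query: query.trim(),
    // One JSON object per line, so a single {{ products }} placeholder can
    // insert the whole catalog as plain text, IDs included.
    products: products.map((p) => JSON.stringify(p)).join("\n"),
  };
}
```

In the SDK version this guide was written against, the variables object is the optional last argument of the AI client's config call, after the AI config key, the context, and a default value; check the AI SDK reference for your version.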

With these parameters, run your application and you should get a response that looks like this:

You can play around with the preferences or the query passed in to see different results.

At this point, when you run your application, the model returns results that are relevant and customized to the input, but the formatting isn't ready to show to customers. If your prompts lived in code, changing the format would require a code change. Because the prompt is stored in an AI config, you can update the formatting without touching the code or redeploying the application.

Step 6: Update configurations dynamically

Next, adjust the formatting. You can do this without changing application code or involving a developer. Any member of your team who wants to edit the prompt, adjust the formatting or tone, or make other changes can do so directly in the LaunchDarkly UI.

In the LaunchDarkly UI, navigate to the Variations tab of the AI config you created earlier. You'll edit the existing variation to reflect the formatting you'd prefer for the output. You'll also update the system messaging to be a bit more friendly and less academic.

First, edit the existing variation and adjust the system message to the following:

Next, adjust the assistant's response-format message so that the output is customer-facing: return the result as well-formatted Markdown and include a link to each product in the catalog:

Run your application again with the same parameters. You should get a response that looks like this:

The output now contains a friendlier message, links to the products, and provides the descriptions in Markdown format. As long as you're providing the data in the completion input context, you can update this output to contain any of the input parameters without the need for code changes.

Bonus: Track LLM metrics

When you set up the OpenAI completion call, you wrapped it in a function called trackOpenAIMetrics. This function automatically captures metrics for your OpenAI calls:

After you've run your completion a few times, check the Monitoring tab for your AI config in the LaunchDarkly UI. The Monitoring tab displays metrics that are automatically tracked, including:

- Generation count

- Input tokens used

- Output tokens used
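trackOpenAIMetrics reads these values from the OpenAI response for you. To make concrete what is being counted, here is a hypothetical local recorder that tallies generations and tokens from the usage field of an OpenAI chat completion response (prompt_tokens and completion_tokens). It is not the SDK's implementation and does not send anything to LaunchDarkly; the duration total is this sketch's own addition.

```typescript
// Subset of the `usage` object on an OpenAI chat completion response.
export interface CompletionUsage {
  prompt_tokens: number;
  completion_tokens: number;
  total_tokens: number;
}

export interface CompletionLike {
  usage?: CompletionUsage;
}

// Running totals comparable to the metrics listed above.
export interface UsageTotals {
  generations: number;
  inputTokens: number;
  outputTokens: number;
  totalDurationMs: number;
}

export function createUsageRecorder() {
  const totals: UsageTotals = { generations: 0, inputTokens: 0, outputTokens: 0, totalDurationMs: 0 };

  // Runs the model call, then records its duration and token usage.
  // If the call throws, the error propagates and nothing is recorded.
  async function track<T extends CompletionLike>(call: () => Promise<T>): Promise<T> {
    const started = Date.now();
    const result = await call();
    totals.generations += 1;
    totals.totalDurationMs += Date.now() - started;
    totals.inputTokens += result.usage?.prompt_tokens ?? 0;
    totals.outputTokens += result.usage?.completion_tokens ?? 0;
    return result;
  }

  // Returns a copy so callers cannot mutate the running totals.
  return { track, totals: (): UsageTotals => ({ ...totals }) };
}
```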

Combined with LaunchDarkly's targeting rules, you can duplicate a variation, change its model or messages, and compare the generation metrics of each.

Tracking success

You can also use the LaunchDarkly AI client to keep a running total of positive and negative feedback about generated results.

If you have a process that validates generated results at runtime, you can use the following:

If you instead wait for customer interaction to determine whether a generation was positive or negative, you can record the feedback later: retrieve the AI config again with the same context, then track the feedback on it:
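Both paths can be sketched with a small, hypothetical helper module. FeedbackTracker is a local stand-in for the tracker attached to an evaluated AI config; at the time of writing, the Node.js AI SDK exposes a trackFeedback method that takes a positive or negative kind (through its LDFeedbackKind values), but confirm the names in the SDK reference. The link check in validateResponse is an invented example of a runtime rule, chosen because the updated prompt asks for product links.

```typescript
// Local stand-ins; the SDK's own types and enum names may differ.
export type FeedbackKind = "positive" | "negative";

export interface FeedbackTracker {
  trackFeedback(feedback: { kind: FeedbackKind }): void;
}

// Hypothetical runtime rule: a response is good if it is non-empty and
// contains at least one Markdown link, such as [name](url).
export function validateResponse(text: string): FeedbackKind {
  const hasLink = /\[[^\]]+\]\([^)\s]+\)/.test(text);
  return text.trim().length > 0 && hasLink ? "positive" : "negative";
}

// Immediate path: validate right after generation and record the result.
export function recordValidation(tracker: FeedbackTracker, text: string): FeedbackKind {
  const kind = validateResponse(text);
  tracker.trackFeedback({ kind });
  return kind;
}

// Deferred path: record a customer's rating later. `getTracker` stands in
// for retrieving the AI config again with the same context and returning
// its tracker.
export async function recordCustomerRating(
  getTracker: () => Promise<FeedbackTracker>,
  thumbsUp: boolean,
): Promise<void> {
  const tracker = await getTracker();
  tracker.trackFeedback({ kind: thumbsUp ? "positive" : "negative" });
}
```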

Conclusion

In this guide, you reviewed how to manage AI model configuration and prompts for an OpenAI-powered application, and how to dynamically customize your application.

Using AI configs with the LaunchDarkly AI SDKs means you can:

- modify AI prompts and model parameters directly in LaunchDarkly

- empower non-engineers to refine AI behavior without code changes

- gain insights into model performance and token consumption

To learn more, read AI configs and AI SDKs.
