Elastic (ELK) Stack


Last edited: Dec 14, 2024

The Elastic Stack integration is only available to customers on select plans. To learn more, read about our pricing. To upgrade your plan, contact Sales.

Overview

This topic explains how to use the LaunchDarkly Elastic (ELK) Stack integration. The Elastic Stack, which is also referred to as the ELK Stack, is a versatile search platform with many use cases, from site and application search to observability and security.

LaunchDarkly's Elastic Stack integration supports log aggregation and data and timeseries visualization.

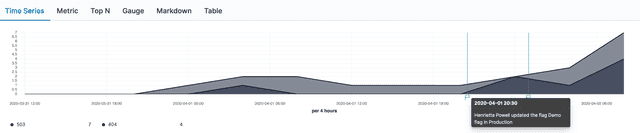

For example, an engineer is alerted to an anomaly in their application's behavior. With the LaunchDarkly Elastic Stack integration sending data to their observability stack, they can overlay flag changes on their timeseries charts and see which changes happened just before the anomalous activity.

Development teams can also build visualizations of their LaunchDarkly change history to learn who interacts with or modifies their feature flags, and they can use Elasticsearch to search their logs for specific information.

Prerequisites

To configure the Elastic integration, you need the following:

- Your Elasticsearch endpoint URL: This is the destination to which LaunchDarkly sends its data. It must include the port number. If you are using Elasticsearch Service on Elastic Cloud, you can find your endpoint URL by clicking your deployment name and selecting Copy Endpoint URL just below the Elasticsearch logo.

- API key credentials: To learn more about getting an API key, read Elastic's API Key Documentation. Make sure this key has permission to write to the appropriate Elasticsearch index.

- Elasticsearch index: The index in your cluster to which LaunchDarkly writes its data. You can use the default or choose your own.
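Because the endpoint URL must name its port explicitly, a quick local check can catch a missing port before you paste the URL into LaunchDarkly. The sketch below uses only Python's standard library; the function name and the example URLs are hypothetical and not part of LaunchDarkly or Elastic.

```python
from urllib.parse import urlparse


def has_explicit_port(endpoint_url: str) -> bool:
    """Return True if the URL uses http(s), has a host, and names a valid port."""
    parsed = urlparse(endpoint_url)
    if parsed.scheme not in ("http", "https") or not parsed.hostname:
        return False
    try:
        # .port is None when no port is written in the URL, and raises
        # ValueError when the port is not a number in the range 0-65535.
        return parsed.port is not None
    except ValueError:
        return False


if __name__ == "__main__":
    # Hypothetical endpoint URLs, for illustration only.
    print(has_explicit_port("https://my-deployment.es.example.com:9243"))
    print(has_explicit_port("https://my-deployment.es.example.com"))
```

The check only inspects the URL's syntax; it does not confirm that the endpoint is reachable or that the port is the right one for your deployment.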

Configure the Elastic Stack integration

Here's how to configure the Elastic Stack integration:

- Navigate to the Integrations page and find "Elastic (ELK) Stack."

- Click Add integration. The "Create Elastic (ELK) Stack configuration" panel appears.

- (Optional) Enter a human-readable Name.

- Paste in the Elasticsearch endpoint URL.

- Paste in the Authentication token. This is the base64 encoding of your API key's id and api_key values, joined by a colon (id:api_key).

- Enter the Index to which you want to write your LaunchDarkly data, or use the default value.

- (Optional) Configure a custom policy to control which information LaunchDarkly sends to your Elastic cluster. To learn more, read Choose what data to send.

- After reading the Integration Terms and Conditions, check the I have read and agree to the Integration Terms and Conditions checkbox.

- Click Save configuration.
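The Authentication token step above can be reproduced with Python's standard base64 module. This is a minimal sketch: the function name and the placeholder credentials are hypothetical, and you should substitute the id and api_key values Elastic returned when you created the key. Recent Elasticsearch versions also return this same value as an "encoded" field when you create an API key, so check your key-creation response first.

```python
import base64


def make_auth_token(api_key_id: str, api_key: str) -> str:
    """Base64-encode "id:api_key" for the integration's Authentication token field."""
    raw = f"{api_key_id}:{api_key}".encode("utf-8")
    return base64.b64encode(raw).decode("ascii")


if __name__ == "__main__":
    # Placeholder credentials; replace them with your real id and api_key.
    print(make_auth_token("my-key-id", "my-api-key"))
```

Treat the resulting token like a password: anyone who has it can write to the index the key is allowed to access.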

After you configure the integration, LaunchDarkly sends flag, environment, and project data to your Elastic cluster.
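To confirm that data is arriving, you can query the index directly with Elasticsearch's _search API, authenticating with the same base64 token in an "Authorization: ApiKey ..." header. The sketch below only builds the request with Python's standard library; it assumes the LaunchDarkly documents carry a date field mapped as a date (the field this page suggests for Kibana's Time Filter), and the function names and example values are hypothetical.

```python
import json
import urllib.request


def build_search_request(endpoint_url, index, auth_token, size=10):
    """Build (but do not send) a _search request for the newest documents in an index."""
    body = {
        "size": size,
        # Assumption: each document has a "date" field mapped as a date.
        "sort": [{"date": {"order": "desc"}}],
    }
    url = f"{endpoint_url.rstrip('/')}/{index}/_search"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"ApiKey {auth_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def fetch_hits(request):
    """Send the request and return the matching documents (requires network access)."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)["hits"]["hits"]
```

For example, fetch_hits(build_search_request("https://my-deployment.es.example.com:9243", "launchdarkly-audit", token)) returns the most recent documents, or raises an HTTP error if the key lacks read access to the index. The integration itself only needs write access, so you may need a separate key with read permission for this check.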

Choose what data to send

The "Policy" configuration field controls which kinds of LaunchDarkly events are sent to Elasticsearch. By default, the policy restricts the integration to flag changes in production environments.

You may want to override the default policy if you want to restrict the integration to a specific combination of LaunchDarkly projects and environments, or to a specific action or set of actions.

For example, a custom policy can restrict LaunchDarkly to only send changes from the web-app project's production environment to Elasticsearch.
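Policies use the same statement format as LaunchDarkly custom roles: each statement has an effect, a list of resources, and a list of actions. The sketch below builds two such policies as Python data and prints them as JSON. The exact resource specifiers are assumptions based on the custom-role resource syntax, so verify them against Policies in custom roles before pasting them into the Policy field.

```python
import json


def allow_statement(resource: str, actions=("*",)) -> dict:
    """Build one policy statement that allows the given actions on a resource."""
    return {"effect": "allow", "resources": [resource], "actions": list(actions)}


# Assumed specifier for the default: flag changes in any project's production environment.
DEFAULT_POLICY = [allow_statement("proj/*:env/production:flag/*")]

# Assumed specifier for the web-app example: flag changes in web-app's production environment.
WEB_APP_PRODUCTION_POLICY = [allow_statement("proj/web-app:env/production:flag/*")]

if __name__ == "__main__":
    print(json.dumps(WEB_APP_PRODUCTION_POLICY, indent=2))
```

Narrowing the actions list, for example to a single action name instead of "*", is how you would restrict the integration to a specific action or set of actions.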

Example payload

Expand the section below to view an example payload of LaunchDarkly data to Elastic Stack.


Send more data to Elasticsearch

Alternatively, you can use the integration to monitor all environment and project changes, not just flag changes. To send everything to Elasticsearch, you must add policy statements for project and environment data.

To send more data to Elasticsearch:

- Navigate to the Integrations page and find the integration you wish to modify.

- Click Edit integration configuration. The "Edit Elastic (ELK) Stack configuration" panel appears.

- If the current policy is already expanded, click Cancel in the lower-right corner. Otherwise, click + Add statement under "Policy."

- Enter a statement for the resources you want to send to Elasticsearch. For example, proj/* sends all project data, and proj/*:env/* sends all environment data from all projects.

- Click Update.

- For each additional category of data you want to send to Elasticsearch, repeat the steps to add a statement, enter it, and click Update.

- When you've added all the policies you wish, click Save configuration.
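The finished policy from the steps above is a list with one statement per category of data, mirroring one + Add statement per category in the UI. This sketch builds that list in Python; proj/* and proj/*:env/* come from the steps above, while the flag specifier proj/*:env/*:flag/* is an assumption added to keep flag changes from every environment.

```python
import json


def everything_policy():
    """Build a policy with one allow statement per resource specifier."""
    resources = [
        "proj/*",               # all project data
        "proj/*:env/*",         # all environment data from all projects
        "proj/*:env/*:flag/*",  # assumed: flag changes in every environment
    ]
    return [
        {"effect": "allow", "resources": [resource], "actions": ["*"]}
        for resource in resources
    ]


if __name__ == "__main__":
    print(json.dumps(everything_policy(), indent=2))
```

Keeping each category in its own statement makes it easy to remove one category later without editing the others.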

To learn more about setting custom policies, read Policies in custom roles.

Use the integration with Kibana

Kibana is a front-end application that provides search and data visualization for data indexed in Elasticsearch.

After you configure the Elastic (ELK) Stack integration, your LaunchDarkly data streams to Elasticsearch and becomes available for searching. You can use Kibana to visualize the data your Elastic (ELK) Stack receives.

To display this data in its own visualizations or use it to annotate your existing timeseries charts, you must first configure your Kibana instance to retrieve it.

To make data available to Kibana, you must create a new index pattern:

- Log into your Kibana instance.

- Navigate to Settings in the left-hand navigation by clicking on the gear icon.

- Under Kibana, select Index Patterns.

- Click Create Index Pattern.

- Enter the pattern that refers to your LaunchDarkly index or indices. If you used the default value, launchdarkly-audit matches only that index. Wildcards are supported, so you may want to end your pattern with * to cover future log index cycling.

- Choose date for the Time Filter field name.

- Click Create index pattern.

- Verify your data appears correctly by using the Discover section of Kibana and selecting your new index pattern.
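To see why a trailing * helps with index cycling, the sketch below approximates Kibana's * wildcard with Python's fnmatch.fnmatchcase and checks which index names a pattern would cover. The index names are hypothetical, and fnmatch is only an approximation: it also treats ? and [ ] as wildcards, which Kibana index patterns do not.

```python
from fnmatch import fnmatchcase


def matching_indices(pattern, index_names):
    """Return the index names that a '*'-style wildcard pattern matches."""
    return [name for name in index_names if fnmatchcase(name, pattern)]


# Hypothetical index names, for illustration only.
indices = ["launchdarkly-audit", "launchdarkly-audit-2024.12", "app-logs"]

print(matching_indices("launchdarkly-audit", indices))   # ['launchdarkly-audit']
print(matching_indices("launchdarkly-audit*", indices))  # ['launchdarkly-audit', 'launchdarkly-audit-2024.12']
```

Because * also matches an empty string, the wildcard pattern still includes the original launchdarkly-audit index as well as any newer ones.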

Now you can use your LaunchDarkly data alongside everything else in Kibana.

Other resources

You can continue reading Elastic's documentation to learn more about these products:

- To learn more about visualizations in Kibana, read Kibana's documentation.

- To learn more about index patterns, read the Elastic Kibana guide.