Experimentation


Last edited: Jan 14, 2025

Overview

This topic explains the concepts and value of LaunchDarkly's Experimentation feature. Experiments let you measure the effect of flags on end users by tracking metrics your team cares about.

About Experimentation

Experimentation lets you validate the impact of features you roll out to your app or infrastructure. You can measure things like page views, clicks, load time, infrastructure costs, and more.

By connecting metrics you create to flags in your LaunchDarkly environment, you can measure changes in your customers' behavior based on the flags they evaluate. This helps you make more informed decisions, so the features your development team ships align with your business objectives. To learn about different kinds of experiments, read Experiment types. To learn about Experimentation use cases, read Example experiments.

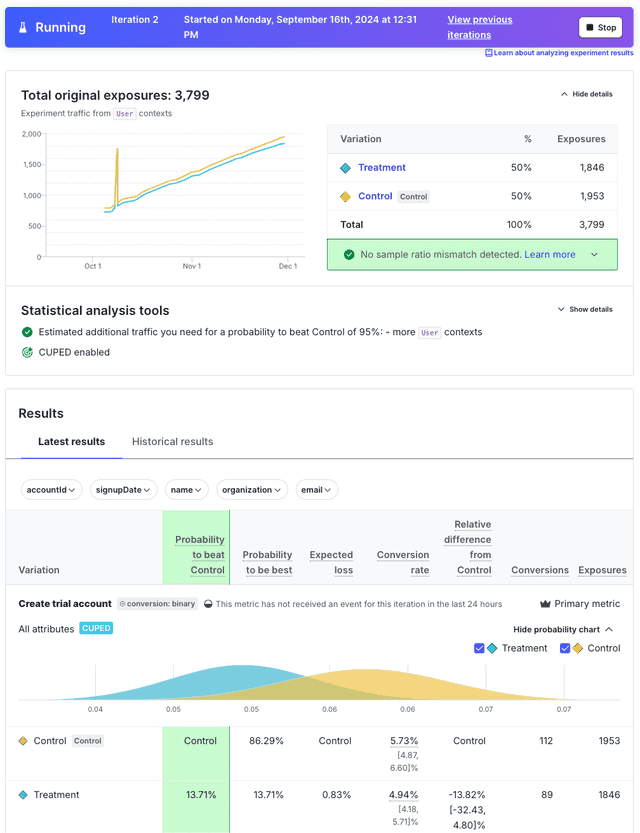

Here is an example of an experiment's Results tab:

Prerequisites

To use Experimentation, you must meet the following prerequisites:

- You must be using the listed version number or higher for the following SDKs:

  Client-side SDKs:

  | SDK | Version |
  | --- | --- |
  | .NET (client-side) | 2.0.0 |
  | Android | 3.1.0 |
  | C++ (client-side) | 2.4.8 |
  | Electron | All versions |
  | Flutter | 0.2.0 |
  | iOS | All versions |
  | JavaScript | 2.6.0 |
  | Node.js (client-side) | All versions |
  | React Native | 5.0.0 |
  | React Web | All versions |
  | Roku | All versions |
  | Vue | All versions |

  Server-side SDKs:

  | SDK | Version |
  | --- | --- |
  | .NET (server-side) | 6.1.0 |
  | Apex | 1.1.0 |
  | C/C++ (server-side) | 2.4.0 |
  | Erlang | 1.2.0 |
  | Go | 5.4.0 |
  | Haskell | 2.2.0 |
  | Java | 5.5.0 |
  | Lua | 1.0 |
  | Node.js (server-side) | 6.1.0 |
  | PHP | 4.1.0 |
  | Python | 7.2.0 |
  | Ruby | 6.2.0 |
  | Rust | 1.0.0-beta.1 |

  Edge SDKs:

  | SDK | Version |
  | --- | --- |
  | Cloudflare | 2.3.0 |
  | Vercel | 1.2.0 |

- Your SDKs must be configured to send events. If you have disabled sending events for testing purposes, you must re-enable it. The all flags method sends events for some SDKs, but not others. For SDKs that do not send events with the all flags method, you must call the variation method instead. If you call the variation method, you must use the right variation type. To learn more about the events SDKs send to LaunchDarkly, read Analytics events.

- You must configure your SDK to assign anonymous contexts their own unique context keys.

- If you use holdouts and are using a client-side SDK, your minimum SDK versions may differ from those listed above. To find the minimum required version for holdouts, read Supported features.

- If you use the LaunchDarkly Relay Proxy, it must be at least version 8, and you must configure it to send events. (The first version of the Relay Proxy to support Experimentation was 6.3.0, but that version is no longer supported.) If you use holdouts and are using a client-side SDK, the minimum required version of the Relay Proxy is 8.10. To learn more, read Configuring an SDK to use the Relay Proxy.
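The version prerequisites above can be checked mechanically. The following is a minimal sketch, not part of any LaunchDarkly SDK: the names `MINIMUM_VERSIONS`, `parse_version`, and `meets_minimum` are hypothetical, only a few rows from the tables are copied in, and pre-release suffixes such as `-beta.1` are simply dropped, which is a simplification.

```python
# A few minimum versions copied from the tables above.
# None stands for "All versions". This mapping is illustrative, not exhaustive.
MINIMUM_VERSIONS = {
    "Python": "7.2.0",
    "Go": "5.4.0",
    "Lua": "1.0",
    "iOS": None,
}


def parse_version(version):
    """Turn '7.2.0' into (7, 2, 0). Pre-release suffixes are dropped (a simplification)."""
    core = version.split("-", 1)[0]
    return tuple(int(part) for part in core.split("."))


def meets_minimum(sdk_name, installed_version):
    """Return True if installed_version satisfies the minimum listed for sdk_name."""
    minimum = MINIMUM_VERSIONS[sdk_name]
    if minimum is None:
        return True
    installed = parse_version(installed_version)
    required = parse_version(minimum)
    # Pad the shorter tuple with zeros so '1.0' and '1.0.0' compare as equal.
    width = max(len(installed), len(required))
    installed += (0,) * (width - len(installed))
    required += (0,) * (width - len(required))
    return installed >= required
```

For example, `meets_minimum("Python", "7.1.9")` is false under this sketch, while `meets_minimum("Go", "5.10.0")` is true because versions are compared numerically rather than as strings.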

Experimentation examples

We designed Experimentation to be accessible to a variety of roles within your organization. For example, product managers can use experiments to measure the value of the features they ship, designers can test multiple variations of UI and UX components, and DevOps engineers can test the efficacy and performance of their infrastructure changes.

If an experiment tells you a feature has a positive impact, you can roll that feature out to your entire user base to maximize its value. Alternatively, if you don't like the results you're getting from a feature, you can toggle the flag's targeting off and minimize its impact on your user base.

Some of the things you can do with Experimentation include:

- A/B/n testing, also called multivariate testing

- Funnel conversion testing

- Starting and stopping experiments at any time so you have immediate control over which variations your customers encounter

- Reviewing credible intervals in experiment results so you can decide which variation you can trust to have the most impact

- Targeting experiments to specific groups of contexts or segments to refine your testing audience

- Measuring the impact of changes to your product at all layers of your technology stack

- Rolling out product changes in multiple stages, leveraging both Experimentation and workflows
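To make the idea of a credible interval concrete, here is a minimal sketch for a conversion metric. It assumes a uniform Beta(1, 1) prior and estimates the interval by sampling from the resulting Beta posterior. This is a textbook approach chosen for illustration, not a description of how LaunchDarkly computes its results, and the function name `credible_interval` is hypothetical.

```python
import random


def credible_interval(conversions, exposures, mass=0.90, draws=20000, seed=0):
    """Estimate an equal-tailed credible interval for a conversion rate.

    Assumes a uniform prior, so the posterior is
    Beta(1 + conversions, 1 + exposures - conversions).
    """
    rng = random.Random(seed)
    samples = sorted(
        rng.betavariate(1 + conversions, 1 + exposures - conversions)
        for _ in range(draws)
    )
    tail = (1.0 - mass) / 2.0
    # Read the interval bounds off the sorted posterior samples.
    lower = samples[int(tail * draws)]
    upper = samples[int((1.0 - tail) * draws) - 1]
    return lower, upper
```

With 120 conversions out of 1,000 exposures, the interval brackets the observed rate of 0.12. It narrows as more data arrives, which is why longer-running experiments tend to give results you can trust more.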

You can use experiments to measure a variety of different outcomes. Some example experiments include:

- Testing different versions of a sign-up step in your marketing conversion funnel

- Testing the efficacy of different search implementations, such as Elasticsearch versus Solr versus Algolia

- Tracking how features you ship are increasing or decreasing page load time

- Calculating conversion rates by monitoring how frequently end users click on various page elements

- Testing the impact of new artificial intelligence and machine learning (AI/ML) models on end-user behavior and product performance
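As an illustration of the conversion-rate example above, the following sketch computes a per-variation conversion rate from two plain lists of events. The input shapes and the function name `conversion_rates` are assumptions made for this example. In practice, LaunchDarkly performs this aggregation for you from the events your SDKs send.

```python
def conversion_rates(exposures, clicks):
    """Compute the share of exposed contexts that clicked, per variation.

    exposures: iterable of (context_key, variation) pairs
    clicks: iterable of context_key values, one per click event
    """
    variation_of = {}
    for context_key, variation in exposures:
        # Count each context once, under the first variation it saw.
        variation_of.setdefault(context_key, variation)

    # Ignore clicks from contexts that were never exposed.
    clicked = set(clicks) & set(variation_of)

    totals = {}
    converted = {}
    for context_key, variation in variation_of.items():
        totals[variation] = totals.get(variation, 0) + 1
        if context_key in clicked:
            converted[variation] = converted.get(variation, 0) + 1

    return {v: converted.get(v, 0) / totals[v] for v in totals}
```

Repeated clicks from the same context count as a single conversion here, which is one reasonable definition among several.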

To learn more, read Designing experiments.

Analyze experiment results

An experiment's Results tab displays the experiment's data in near-real time. To learn more, read Analyzing experiments.

As your experiment collects data, LaunchDarkly calculates the variation that is most likely to be the best choice out of all the variations you're testing. After you decide which flag variation has the impact you want, you can gradually roll that variation out to 100% of your customers with LaunchDarkly's percentage rollouts feature. To learn more about percentage rollouts, read Percentage rollouts.
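One common way to estimate which variation is most likely to be best is Monte Carlo sampling from each variation's posterior distribution. The sketch below assumes a conversion metric with a uniform Beta(1, 1) prior. It is an illustration of the general idea only, not LaunchDarkly's actual model, and `probability_to_be_best` is a hypothetical name.

```python
import random


def probability_to_be_best(results, draws=20000, seed=0):
    """Estimate each variation's probability of having the highest conversion rate.

    results maps a variation name to a (conversions, exposures) pair.
    """
    rng = random.Random(seed)
    wins = {name: 0 for name in results}
    for _ in range(draws):
        # Draw one plausible conversion rate per variation from its posterior.
        sampled = {
            name: rng.betavariate(1 + conv, 1 + seen - conv)
            for name, (conv, seen) in results.items()
        }
        # Credit the variation with the highest sampled rate in this draw.
        wins[max(sampled, key=sampled.get)] += 1
    return {name: count / draws for name, count in wins.items()}
```

A call such as `probability_to_be_best({"control": (100, 1000), "treatment": (150, 1000)})` returns a probability for each variation, and the probabilities sum to 1. A variation with a clearly higher observed rate and enough exposures ends up with a probability close to 1.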

You can export experiment data to an external destination using Data Export. To learn more, read Data Export.

Experimentation best practices

As you use Experimentation, consider these best practices:

- Use feature flags on every new feature you develop. This is a general best practice, and it especially helps when you're running experiments in LaunchDarkly. By flagging every feature, you can quickly turn any aspect of your product into an experiment.

- Run experiments on as many feature flags as possible. This creates a culture of experimentation that helps you detect unexpected problems and refine and pressure-test metrics.

- Consider experiments from day one. Create hypotheses in the planning stage of feature development, so you and your team are ready to run experiments as soon as your feature launches.

- Define what you're measuring. Align with your team on which tangible metrics you're optimizing for, and what results constitute success.

- Plan your experiments in relation to each other. If you're running multiple experiments simultaneously, make sure they don't collect similar or conflicting data.

- Associate end users who interact with your app before and after logging in. If someone accesses your experiment from both a logged-out and a logged-in state, each state will generate its own context key. You can associate multiple related contexts together using multi-contexts. To learn more, read Associate anonymous contexts with logged-in end users.
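The last practice can be sketched with plain data structures. The example below generates a unique key for an anonymous context, then carries that same key into a multi-context after login so both states refer to the same device. The dictionary shapes are modeled on LaunchDarkly's JSON context format as an assumption, the `device` context kind and all function names are hypothetical, and a real application would build contexts with its SDK's own context API.

```python
import uuid


def new_anonymous_key():
    """Generate a unique key for an anonymous context."""
    return str(uuid.uuid4())


def anonymous_context(device_key):
    """Context for someone who has not logged in yet (assumed shape)."""
    return {"kind": "device", "key": device_key, "anonymous": True}


def logged_in_multi_context(device_key, user_key):
    """Multi-context that keeps the anonymous device key alongside the user (assumed shape)."""
    return {
        "kind": "multi",
        "device": {"key": device_key, "anonymous": True},
        "user": {"key": user_key},
    }
```

The design choice here is to store the generated device key, for example in local storage, and reuse it after login, so that events recorded before and after login can be tied to the same device context.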

You can also use the REST API. To learn more, read Experiments (beta).