The Relay Proxy

Last edited: May 01, 2024

Overview

This topic explains what the Relay Proxy is and how to use it.

About the Relay Proxy

The LaunchDarkly Relay Proxy is a small Go application that runs on your own infrastructure. It connects to the LaunchDarkly streaming API and proxies that connection to clients within your organization's network.

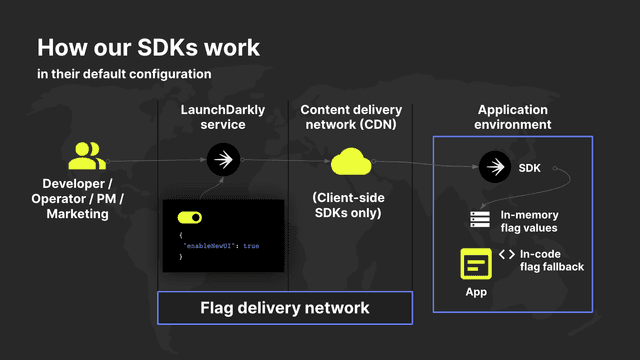

Here is a diagram illustrating how LaunchDarkly SDKs work when you are not using the Relay Proxy:

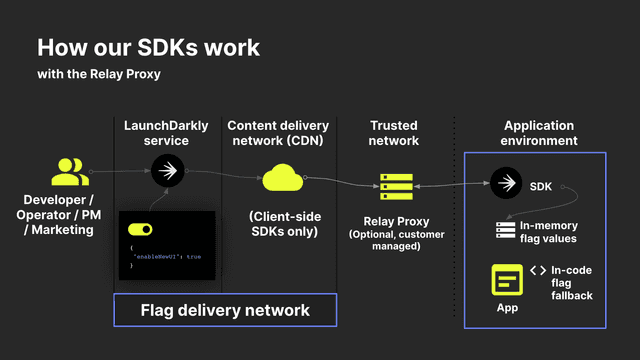

The Relay Proxy lets multiple servers connect to a local stream instead of making a large number of outbound connections to LaunchDarkly's streaming service (stream.launchdarkly.com). Each of your servers connects only to the Relay Proxy, and the Relay Proxy maintains the connection to LaunchDarkly. You can configure the Relay Proxy to carry multiple environment streams from multiple projects.
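The fan-out described above can be sketched in a few lines of Go. This is only an illustration of the idea, not the Relay Proxy's actual implementation: the `Update`, `Relay`, `Subscribe`, and `Publish` names are hypothetical, the environment names are made up, and in-process channels stand in for network streams.

```go
package main

import (
	"fmt"
	"sync"
)

// Update is a hypothetical flag-change message. The real stream payload differs.
type Update struct {
	Env  string // environment the update belongs to
	Flag string // key of the flag that changed
}

// Relay stands in for the proxy: it receives each upstream update once
// and fans it out to every local subscriber of that environment.
type Relay struct {
	mu   sync.Mutex
	subs map[string][]chan Update // subscribers keyed by environment name
}

// NewRelay returns a Relay with no subscribers.
func NewRelay() *Relay {
	return &Relay{subs: make(map[string][]chan Update)}
}

// Subscribe registers a local client for one environment's stream.
// The buffer size controls how many updates can queue before Publish blocks.
func (r *Relay) Subscribe(env string, buffer int) <-chan Update {
	ch := make(chan Update, buffer)
	r.mu.Lock()
	r.subs[env] = append(r.subs[env], ch)
	r.mu.Unlock()
	return ch
}

// Publish delivers one upstream update to every subscriber of its
// environment and returns how many subscribers received it.
// For simplicity it blocks if a subscriber's buffer is full.
func (r *Relay) Publish(u Update) int {
	r.mu.Lock()
	defer r.mu.Unlock()
	for _, ch := range r.subs[u.Env] {
		ch <- u
	}
	return len(r.subs[u.Env])
}

func main() {
	relay := NewRelay()

	// Two servers follow "production" and one follows "staging".
	web := relay.Subscribe("production", 1)
	api := relay.Subscribe("production", 1)
	staging := relay.Subscribe("staging", 1)

	// A single upstream update reaches both production subscribers.
	n := relay.Publish(Update{Env: "production", Flag: "example-flag"})
	fmt.Println("delivered to", n, "subscribers")
	fmt.Println("web got:", (<-web).Flag)
	fmt.Println("api got:", (<-api).Flag)
	fmt.Println("staging pending:", len(staging))
}
```

In this sketch, the single `Publish` call plays the role of the one outbound connection to LaunchDarkly, and each `Subscribe` call plays the role of a server connecting to the local stream. Keying subscribers by environment mirrors the ability to carry multiple environment streams through one Relay Proxy.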

Here is a diagram illustrating how LaunchDarkly SDKs work when you are using the Relay Proxy:

The Relay Proxy is an open-source project supported by LaunchDarkly. The full source is in a GitHub repository. There's also a Docker image on Docker Hub.

Customers on an Enterprise plan can use Relay Proxy Enterprise, which provides additional functionality.

To decide whether the Relay Proxy is appropriate for your configuration, read Relay Proxy use cases. To learn how to implement the Relay Proxy, read Implementing the Relay Proxy.